Customer support at Amazon

Sep. 8th, 2017 02:16 pm

We are using Amazon SES to send over 10M emails per month.

This is a cheap and reliable service, and overall I like it.

However, customer support is poor.

Recently, the Amazon SES team removed two important graphs from their SES dashboard: "Bounce rate" and "Complaints".

Apparently, the Amazon SES team decided that customers would be happier building their own reports on buggy CloudWatch than using an already prepared report.

I complained about that "development" on the AWS forum, and here is the reply:

https://forums.aws.amazon.com/thread.jspa?messageID=803845#803845

The proposed solution does NOT work.

To add insult to injury, the AWS forum does not allow me to reply yet and shows this message instead: "Your message quota has been reached. Please try again later."

Here is my forum reply that I am NOT yet able to post on the AWS forum:

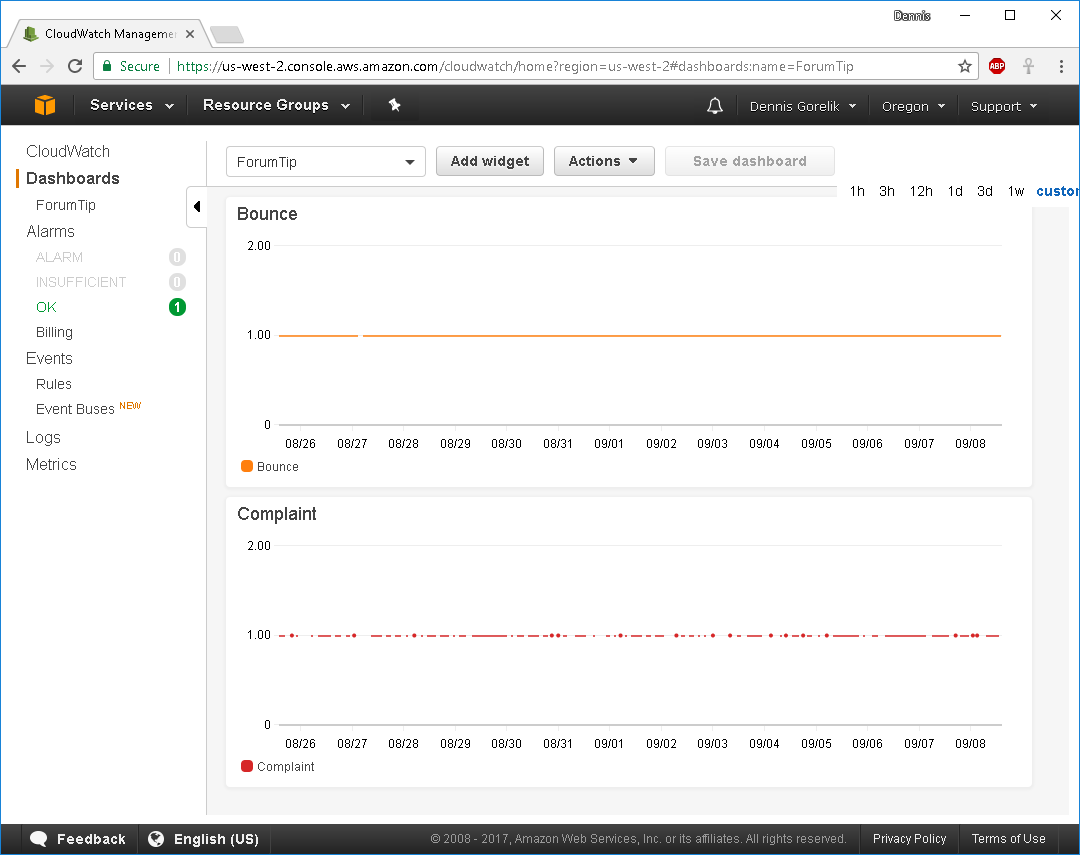

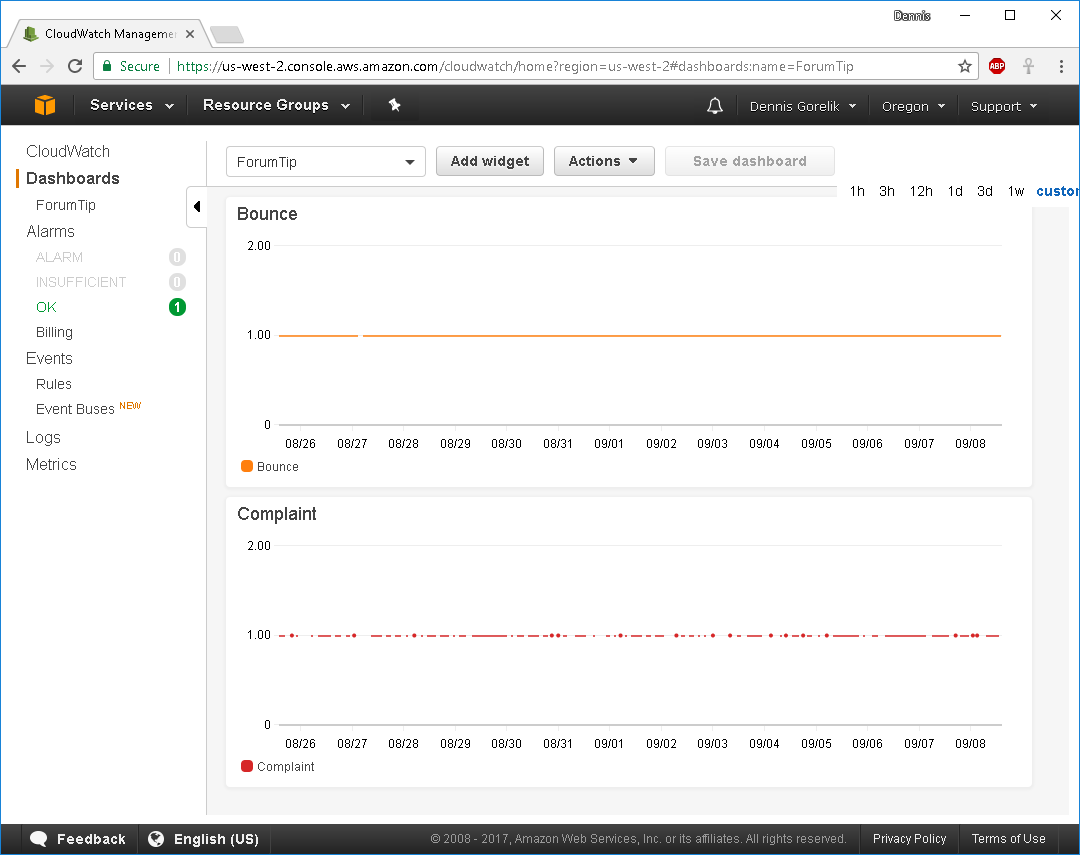

===============

@AWSBrentM

1) Thank you for trying to help. I followed your steps and this produced two graphs to me. Both graphs have constant horizontal lines at 1.00 level. So, pretty much, both graphs are totally useless.

https://us-west-2.console.aws.amazon.com/cloudwatch/home?region=us-west-2#dashboards:name=ForumTip

Should I use instead different "metrics" instead of "Bounce" and "Complaint"? "Reputation.BounceRate" and "Reputation.ComplaintRate" perhaps?

2) Do you know why Amazon SES Team decided to make us (its customers) to jump through the hoops of creating these awkward custom reports instead of just keeping already existing functionality? This exercise is time-consuming, and while I am troubleshooting cloudwatch app - I am NOT building my own app that pays the bills for all of us.

3) "Your message quota has been reached. Please try again later."
... this is my second message (and the first message in ~12 hours). It looks like Amazon SES Team is not eager about receiving feedback...

===============
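One possible explanation for the flat 1.00 lines (my assumption, not something confirmed in the forum thread) is that every bounce or complaint is recorded as a separate data point with value 1, so a graph that plots the average of those points can only ever show 1.00. A rate needs the count of events divided by the count of sends in the same period. Here is a minimal Python sketch of that arithmetic. The hourly numbers and the function names are made up for illustration and are not real SES data or an AWS API.

```python
from statistics import mean


def average_statistic(datapoints):
    """Average of the raw data points in one period (what an 'Average' graph plots)."""
    return mean(datapoints) if datapoints else None


def rate_percent(event_count, send_count):
    """Events as a percentage of sends for one period; None when nothing was sent."""
    if send_count == 0:
        return None
    return 100.0 * event_count / send_count


# Hypothetical hourly data. Assumption: each bounce is one data point with value 1.
bounce_datapoints_per_hour = [[1, 1, 1], [1], [1, 1, 1, 1, 1]]
sends_per_hour = [1000, 800, 1250]

# Averaging points that are all equal to 1 always yields 1, whatever the volume.
averages = [average_statistic(hour) for hour in bounce_datapoints_per_hour]

# Summing the points gives the bounce count; dividing by sends gives the rate.
bounce_rates = [
    rate_percent(sum(hour), sends)
    for hour, sends in zip(bounce_datapoints_per_hour, sends_per_hour)
]

print(averages)
print(bounce_rates)
```

If that assumption holds, a graph built on the sum of "Bounce" divided by the sum of "Send" would be closer to the removed "Bounce rate" graph than a graph built on the average of "Bounce" alone.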

Coincidentally, a few days ago I received an email from a SendGrid rep suggesting that it may be time to switch from Amazon SES to SendGrid in order to get superior customer support.

I asked the SendGrid rep what kind of support I might need, but got no clear reply. Still, the Amazon SES team seems happy to prove their competitors right.

See also: https://dennisgorelik.dreamwidth.org/tag/amazon+ses